404: Privacy Not Found - ChatGPT Conversations Search Scandal

Picture this: you're spilling your guts to ChatGPT about your messy breakup, your shady business idea, or your secret recipe for grandma's lasagna. You think it's just between you and the bot. Wrong! Turns out, thousands of these "private" conversations were popping up in Google search results, and privacy activists are screaming "I told you so" and having a field day.

We Love To Share Because We Care?

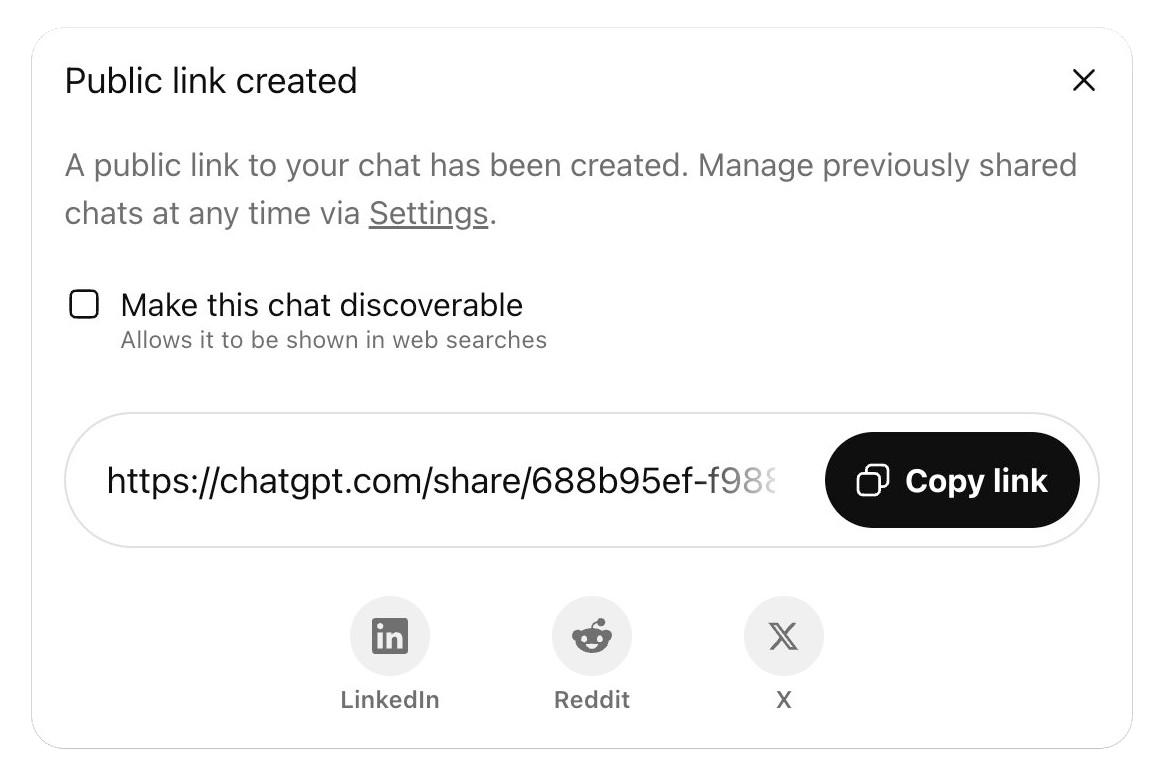

Let’s see how this got started. It all began with OpenAI's shiny "Share" feature, which let users create public links to their chats. Maybe you found some inspiration in a ChatGPT conversation and wanted to share it with a friend? Maybe you wanted a record of that conversation saved online somewhere?

OpenAI conveniently gave you an option to do that. However, buried in the fine print was a tiny checkbox reading “Make this chat discoverable. Allows it to be shown in web searches.” In all fairness to the designers, it was not checked by default. Many users, bless their optimistic souls, checked the box anyway and thought, "Well, what's the worst that could happen?" The worst was about to happen.

By late July, privacy researchers like Luiza Jarovsky started yelling from the social media rooftops: "Hey, these chats are on Google!" And just like that, more than 4,500 conversations were out in the open, with potentially up to 100,000 more lurking in archives. Nothing says "user-friendly" like turning your therapy session into public reading material.

🚨 SHOCKING: people are unknowingly making their ChatGPT interactions PUBLIC, and they are being indexed by Google (see my test below). My privacy recommendations:

When people interact with ChatGPT and use the "Share" feature (for example, to send the conversation to family and… pic.twitter.com/PBsCddfJyv

— Luiza Jarovsky, PhD (@LuizaJarovsky) July 31, 2025

OpenAI's response? They yanked the feature faster than you can say "oops" – within 24 hours, to be precise. Their security chief, Dane Stuckey, basically admitted, "Yeah, we messed up; this thing was a recipe for accidental oversharing."

We just removed a feature from @ChatGPTapp that allowed users to make their conversations discoverable by search engines, such as Google. This was a short-lived experiment to help people discover useful conversations. This feature required users to opt-in, first by picking a chat… pic.twitter.com/mGI3lF05Ua

— DANΞ (@cryps1s) July 31, 2025

Open Kimono: From Therapy Sessions to Fraud Scheme Explorations

Now, let's talk about what got leaked, because oh boy, it goes way beyond friendly daily banter with your colleagues. We're not naming names here (privacy, remember?), but imagine stumbling upon strangers' chats about their battles with depression or PTSD, or that time they vented about their toxic boss. There were resumes spilling personal details, business plans that could make a competitor's day, and chats about medical woes and romantic disasters. And get this: financial fraud schemes, potential criminal confessions, and corporate espionage plots were all fair game. It's like the AI turned into a confessional booth with a megaphone.

ChatGPT quietly scrubbed today nearly 50,000 shared conversations from Google's index after our investigation. They thought they'd solved the problem. They were wrong. (1/5) pic.twitter.com/cNE7UJdTCd

— 𝚑𝚎𝚗𝚔 𝚟𝚊𝚗 𝚎𝚜𝚜 (@henkvaness) August 1, 2025

Even after OpenAI played cleanup crew and got Google to scrub the results, the Internet Archive's Wayback Machine had snapped up around 100,000 of these chats like a digital hoarder. They're still floating around unless someone bothers to ask nicely for removal. Moral of this chapter? The internet never forgets, even when you beg it to.
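If you ever shared a chat, you can at least check whether the Wayback Machine grabbed a copy. The sketch below is an illustration, not an official tool. It assumes the Internet Archive's public availability endpoint (`https://archive.org/wayback/available`), which answers with JSON describing the closest archived snapshot of a URL; the helper names (`build_query_url`, `closest_snapshot`, `check_archived`) are hypothetical. An empty result is not proof that no copy exists, since the endpoint only reports the closest snapshot it knows about.

```python
import json
import urllib.parse
import urllib.request
from typing import Optional

# Assumption: the Internet Archive's public "availability" endpoint, which
# reports the closest archived snapshot of a given URL as JSON.
WAYBACK_API = "https://archive.org/wayback/available"


def build_query_url(shared_link: str) -> str:
    """Build the availability-API request URL for one shared-chat link."""
    return WAYBACK_API + "?" + urllib.parse.urlencode({"url": shared_link})


def closest_snapshot(payload: dict) -> Optional[str]:
    """Pull the closest snapshot URL out of the API's JSON, or None if absent."""
    closest = payload.get("archived_snapshots", {}).get("closest")
    if closest and closest.get("available"):
        return closest.get("url")
    return None


def check_archived(shared_link: str, timeout: float = 10.0) -> Optional[str]:
    """Ask the Wayback Machine whether it holds a copy of shared_link."""
    with urllib.request.urlopen(build_query_url(shared_link), timeout=timeout) as resp:
        payload = json.load(resp)  # response body is JSON
    return closest_snapshot(payload)
```

Usage: call `check_archived(...)` on each link from your own shared-links list. A non-`None` result is the snapshot URL you would need to ask the Internet Archive to take down. Parsing is kept separate from the network call so it can be checked offline.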

Legal Fine Print That Screamed "I dare you to ignore me."

So, how did this comedy of errors unfold? Blame it on a perfect storm of bad design choices. The technical analysis boiled down to four problems:

- Poor UX design: the checkbox was easy to overlook

- Insufficient warnings: the note about web searches was tucked away in small print

- Default assumptions: users expected sharing to reach only the intended recipients, not global search engines

- Lack of friction: there was no extra dialog box asking users to confirm the decision

“Privacy by Design”: The Way Forward… for Now

In a world where deleting a photo from your phone takes five steps and ten warnings, making an AI chat findable by the whole internet took a share button and one checkbox. For OpenAI and its AI buddies, this was a wake-up call. Trust took a nosedive, competitors with better privacy flexes went on the PR offensive, and everyone is now triple-checking their features. Regulators? Oh, they're circling like sharks. GDPR folks in Europe are muttering about consent fails and data minimization. Translation: "You processed way more than necessary, dummies." In the US, Congress was already poking around AI privacy and transparency laws before this incident. Long-term? Expect more rules, mandatory privacy checks, and AI designs that actually put users first. Because apparently, "privacy by design" wasn't in the original memo.

But let's not forget us, the users, the real stars (or victims) of this tale. We treat these AI chats like free therapy sessions, dumping our deepest secrets without a second thought. Bots aren't priests; they're code. This leak showed how we skip the fine print, assume privacy is a given, and forget that "deleted" often means "hiding in plain sight." It's funny in a dark way: we're all guilty of clicking "agree" without reading, yet when it backfires like this, we're suddenly shocked. The risks? Your data can live forever and is easy to expose, and the gap between what we expect and reality is wider than the Grand Canyon.

A Cautionary Tale

Innovation meets idiocy, privacy gets trampled, and we all learn the hard way. OpenAI fixed it quickly, but prevention beats cure every time. Companies: bake in better consent flows, educate users, and test your features on actual humans. Us mortals: read the warnings, use incognito modes for the juicy stuff, and make your AI conversations private (check out the prevention guide below). And remember: if it's too good (and free) to be true, it might just end up on Google. Regulators are gearing up for tougher AI laws, which is great, but ultimately privacy is a team sport. Don't be the next headline. For the love of all things digital, think before you share. Your secrets will thank you.

Below is a practical guide to making your AI conversations private in some of the popular bots on the market.

Practical Prevention Guide: Making AI Conversations Private

Here's how to do it, bot by bot:

ChatGPT

Enable Temporary Chat Mode:

- Open ChatGPT in your browser or mobile app

- Click the ChatGPT heading at the top of the page

- Select “Temporary Chat” option

- Conversations in this mode are not saved to your history or used for training, though OpenAI says it may keep a copy for up to 30 days for safety purposes

Disable Model Training:

- Click your profile icon in the upper right corner

- Select “Settings”

- Go to “Data Controls”

- Toggle off “Improve the model for everyone”

- Your conversations will still appear in history but won’t be used to train ChatGPT

Manage Shared Links:

- Go to Settings → Data Controls

- Click “Manage” for “Shared links”

- Review and delete any unwanted shared conversations

- Consider deleting all shared links if unsure about their privacy status

Claude

Profile Management:

- Open Settings from the menu in the bottom left corner

- Select “Profile” to configure personal context

- Only add information you’re comfortable with Claude knowing across all conversations

- Leave blank to maintain maximum privacy

Conversation Isolation:

- Each Claude conversation is isolated by default

- No cross-conversation memory unless you explicitly reference previous chats

- Delete individual conversations using the trash icon

Project Organization:

- Use Projects to group related conversations

- Projects allow sharing context between selected conversations

- Keep sensitive topics in separate projects or individual chats

Perplexity

Incognito Mode (Limited Effectiveness):

- Click the incognito icon at the bottom left

- Note: This mode has limitations and may still recognize user context

- Use for general queries rather than sensitive information

Profile Settings:

- Click your profile icon at the bottom

- Review and minimize personal information in your profile

- Consider using generic location and professional information

Thread Management:

- Organize conversations into threads by topic

- Keep sensitive discussions in separate threads

- Regularly review and delete unnecessary conversation threads

Gemini

Turn Off Activity Logging:

- Go to myactivity.google.com/product/gemini

- Click “Turn off” to disable activity logging

- Select “Turn off and delete activity” to remove existing data

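- Note: Gemini is integrated with Google’s broader data ecosystem, and Google says that even with activity turned off, conversations are kept with your account for up to 72 hours to provide the service and process feedback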